The inestimable value of AI: How Machine Learning can help AM project teams achieve their goals and beyond

They each have similar two-letter acronyms, and, for both technologies, it can be hard to separate hype from reality. But Artificial Intelligence (AI) and Additive Manufacturing (AM) also overlap in interesting and beneficial ways. In this article, Stephen Warde of Intellegens considers how AI methods such as Machine Learning (ML) could help AM to deliver against expectations and, at the very least, to meet more realistic and commercially essential objectives, such as consistently delivering lighter, stronger components and supporting on-demand manufacturing. [First published in Metal AM Vol. 7 No. 4, Winter 2021]

Machine Learning has many potential applications in Additive Manufacturing, from generative design of part geometries to defect detection in manufactured components. In this article, we’ll focus on the metal AM development process, from the design or selection of powders through to the successful, repeatable manufacture of parts. Anything that makes this workflow faster and more reliable will be of enormous value, particularly in sectors such as aerospace, where new materials and parts must go through numerous certification cycles, often taking years and costing millions of dollars.

The project teams engaged in this area have what appears to be an ideal application for Machine Learning: they want to optimise a series of processes. Material composition, operating conditions, and process settings all interact with one another and impact the outcomes of these processes in complex and subtle ways. Even though we can describe and understand individual effects through the laws of physics, establishing all of the factors at play and grasping how they interact in multi-dimensional space is beyond straightforward human comprehension. But Machine Learning doesn’t care about understanding those physical laws; it should be able to take data on the inputs and outputs of any process and ‘train’ models to capture how the inputs give rise to the outputs. Such a model could then, for example, predict which inputs will deliver optimal outputs. Can’t we simply apply this to AM?

It turns out that, yes, we can use Machine Learning for data-driven AM. But it’s not so simple! As a result, this potential is only now beginning to be realised.
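To make the idealised version of this idea concrete, here is a minimal sketch in Python, assuming NumPy is available and that a complete, clean set of input/output measurements exists. The two inputs, the "hidden" process function and its optimum are all hypothetical, and the model is a plain quadratic regression fitted by least squares, not any particular commercial method. The trained model is then used to rank candidate inputs by predicted output.

```python
import numpy as np

# Hypothetical process (unknown to the model): the response peaks at x1 = 0.6, x2 = 0.3,
# with an interaction between the two inputs and a little measurement noise.
def hidden_process(x1, x2, rng):
    a, b = x1 - 0.6, x2 - 0.3
    return 10 - 8 * a**2 - 5 * b**2 + 3 * a * b + rng.normal(0.0, 0.1, size=len(x1))

def quad_features(x1, x2):
    # Full quadratic basis: 1, x1, x2, x1^2, x2^2, x1*x2
    return np.column_stack([np.ones_like(x1), x1, x2, x1**2, x2**2, x1 * x2])

def train(x1, x2, y):
    # Least-squares fit of the quadratic model to the observed inputs and outputs
    coef, *_ = np.linalg.lstsq(quad_features(x1, x2), y, rcond=None)
    return coef

def predict(coef, x1, x2):
    return quad_features(x1, x2) @ coef

rng = np.random.default_rng(0)
x1 = rng.uniform(0.0, 1.0, 30)   # 30 hypothetical experiments, every row complete
x2 = rng.uniform(0.0, 1.0, 30)
coef = train(x1, x2, hidden_process(x1, x2, rng))

# Use the trained model to rank a grid of candidate settings by predicted output
g1, g2 = np.meshgrid(np.linspace(0.0, 1.0, 101), np.linspace(0.0, 1.0, 101))
cand1, cand2 = g1.ravel(), g2.ravel()
best = int(np.argmax(predict(coef, cand1, cand2)))
best_x1, best_x2 = cand1[best], cand2[best]
print(f"predicted optimum: x1={best_x1:.2f}, x2={best_x2:.2f}")
```

This works only because every sample is complete and the assumed process is smooth and low-dimensional; real AM data rarely satisfies either condition.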

The challenge

To understand what’s difficult about applying Machine Learning, let’s look at how material and process optimisation typically proceeds and, thus, where the data that could feed a Machine Learning approach comes from. Testing every property of interest for every possible combination of material and build parameters is, of course, impossible. So, in both the development of new AM materials and the subsequent optimisation of AM processes, experimental design techniques are typically used. The most common is Design of Experiments (DOE), which systematically investigates the effects of varying parameters in the system.
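As an illustration of how DOE trims the number of runs, the sketch below builds a textbook two-level half-fraction design for four factors. It assumes NumPy; the factors A to D are hypothetical placeholders (they could stand for, say, four build parameters), and the generator D = ABC is one standard choice, not a recommendation for any particular AM process.

```python
import itertools
import numpy as np

def full_factorial(k):
    # Every combination of k factors at coded levels -1 (low) and +1 (high): 2**k runs
    return np.array(list(itertools.product([-1, 1], repeat=k)))

def half_fraction_four_factors():
    # 2^(4-1) design: full factorial in A, B, C, with D set to A*B*C (defining relation I = ABCD)
    abc = full_factorial(3)
    d = abc.prod(axis=1, keepdims=True)
    return np.hstack([abc, d])

design = half_fraction_four_factors()
print(len(full_factorial(4)), "runs for the full factorial")   # 16 runs for the full factorial
print(len(design), "runs for the half fraction")               # 8 runs for the half fraction
```

Every column of the half fraction is balanced and the four main-effect columns are mutually orthogonal. The price of halving the runs is aliasing: with I = ABCD, each main effect is confounded with a three-factor interaction and the two-factor interactions are confounded in pairs (AB with CD, and so on), which limits what the design can reveal about interacting parameters.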

The DOE process sets out to identify a set of experiments that will tell us the most about the system for the least effort, applying various statistical methods to propose which factors to vary. The knowledge and intuition of scientists also help to narrow the options. This approach can significantly reduce the amount of experimentation required to obtain reliable coverage of the search space. But DOE usually still requires large numbers of tests and a lengthy, expensive process. In AM, which is further complicated by the inherent variability of many processes and machines, DOE approaches can struggle to reduce the experimental workload to a manageable level.

This is in part because DOE statistical methods, although they improve on ‘Change One Separate variable at a Time (COST)’ approaches, are still limited in their ability to efficiently explore the universe of potential solutions. Machine Learning could potentially learn complex interactions between all of the factors involved, and so find better ways to cut the amount of experimentation required. But there is a ‘catch-22’: training a Machine Learning model typically requires a critical mass of quality data that is complete (i.e., has no ‘holes’) for all of the factors that you wish to model. Real-world experimental datasets are rarely complete, and building complete ones is expensive.
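The weakness of changing one variable at a time shows up as soon as factors interact. The toy example below uses a hypothetical response in two coded factors, each with 11 settings, whose optimum lies on a diagonal ridge. Tuning one factor and then the other from a fixed starting point gets stuck well short of the true optimum, which an exhaustive search over both factors finds. It is pure Python and purely illustrative.

```python
# Hypothetical response with a strong interaction between factors x and y:
# it is best when x == y == 7 and falls off steeply away from the x == y ridge.
def response(x, y):
    return -20 * (x - y) ** 2 - (x + y - 14) ** 2

LEVELS = range(11)  # coded settings 0..10 for each factor

def cost_search(start_y):
    # Change One Separate variable at a Time: tune x with y held fixed, then tune y with x fixed
    x = max(LEVELS, key=lambda v: response(v, start_y))
    y = max(LEVELS, key=lambda v: response(x, v))
    return x, y, response(x, y)

cost_x, cost_y, cost_val = cost_search(start_y=2)
best_val, best_x, best_y = max((response(x, y), x, y) for x in LEVELS for y in LEVELS)

print(cost_x, cost_y, cost_val)   # 2 2 -100  (after 22 evaluations)
print(best_x, best_y, best_val)   # 7 7 0     (after 121 evaluations)
```

Exhaustive search is rarely affordable in practice; the point of better DOE methods, and of Machine Learning, is to capture such interactions with far fewer runs.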

Even constructing a relatively small training dataset that is complete for every factor of interest is difficult. Firstly, this presupposes that you know what the most interesting factors are, which may not be the case in an emerging tech area. Even if you can limit the properties to consider, it is usually not possible to measure every one of these properties for a single test coupon – think of the many mechanical properties that are measured through destructive testing. Building datasets by testing different samples that replicate the same input conditions is also difficult in AM, where performance is notoriously sensitive to tiny variations in factors such as geometry or processing conditions.

Ideally, AM teams would start with the project data that they already have and use Machine Learning to mine it. Because this involves aggregating data from many different tests, and possibly many different projects, the result will be a dataset that, while rich, is inherently sparse. That is, if imagined as a spreadsheet where the rows are materials or parts and the columns are inputs and outputs, the spreadsheet would be riddled with blank cells. The data, brought together from multiple sources and subject to the vagaries of AM processes, will also be noisy. Faced with such real-world experimental data, conventional Machine Learning methods usually fail. These challenges can be mitigated, to some extent, with a large data processing effort and the application of smart data science, but each additional step reduces the likelihood of Machine Learning being applied in practice by AM teams.
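A small, entirely hypothetical example shows why sparsity is so damaging to methods that need complete rows. The sketch below (Python with NumPy; column names and values are invented for illustration) stores missing measurements as NaN, then compares how much of the table is observed with how many rows survive if incomplete rows are simply dropped.

```python
import numpy as np

nan = np.nan
# Hypothetical aggregated dataset: one row per build, NaN where a value was never measured
columns = ["laser_power_W", "scan_speed_mm_s", "density_pct", "yield_MPa", "elongation_pct"]
data = np.array([
    [200.0,  800.0, 99.1,   nan,  nan],
    [250.0,  900.0,  nan, 950.0, 12.0],
    [  nan, 1000.0, 98.7, 980.0,  nan],
    [300.0,    nan, 99.5,   nan, 10.5],
    [220.0,  850.0, 99.0, 940.0, 11.8],
    [280.0,  950.0,  nan,   nan,  9.9],
])

observed = ~np.isnan(data)
complete_rows = observed.all(axis=1)

print(f"{observed.mean():.0%} of cells observed")               # 70% of cells observed
print(f"{complete_rows.sum()} of {len(data)} rows complete")    # 1 of 6 rows complete
for name, frac in zip(columns, observed.mean(axis=0)):
    print(f"{name}: {frac:.0%} observed")
```

Dropping incomplete rows would discard five of the six builds even though 70% of the values are present; methods designed for sparse data aim to make use of all of those observed values instead.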

Solving the ‘sparse, noisy data’ problem for alloy design

Meeting the ‘sparse, noisy data’ challenge for Machine Learning, specifically in the context of materials design, has been a focus of research by Dr Gareth Conduit and his collaborators at the University of Cambridge. The team developed a novel Machine Learning tool with specialist capabilities for handling sparse experimental data. In work with Rolls-Royce, the tool was validated for the design of alloys, including two nickel-base alloys for jet engines, two molybdenum alloys for forging hammers, and an alloy for additively manufactured combustors. Each alloy had around a dozen individual physical properties that were predicted to match or exceed those of commercially available alternatives, and these predictions have been experimentally verified.

This Machine Learning method has been commercialised by university spin-out company Intellegens as the Alchemite™ software. The aim, as Gareth Conduit explains, is to “change the contemporary approach to materials design, which comprises many cycles of trial and improvement. We use Machine Learning to short-circuit this approach, designing a material that fulfils all of the target criteria.”

Since Intellegens was founded in 2017, the technology has been put to work on a wide variety of applications, within and beyond the materials and process field. In AM, these included a further example of material design, this time working with GKN Aerospace and the ATI Boeing Accelerator to identify a new titanium alloy that could maximise thermal conductivity without diminishing mechanical properties for heat exchanger applications. Marko Bosman, Chief Technologist at GKN Aerospace, highlighted the benefits of “a powerful tool for virtual experimentation, unleashing unexplored territory in the search for better metal alloys tailored to future applications.”

The virtual experimentation referenced by Bosman does not replace all physical experiments, but it helps researchers to choose which of those experiments to do. Gareth Conduit stresses how important accurate uncertainty quantification in the Machine Learning model is to this process: “We do not just predict a set of properties. We have invested a lot of effort in ensuring that our Machine Learning algorithm gives the scientist detailed information about the uncertainty of every value – that is, how much trust they should place in it. This is essential to help scientists identify which candidate materials are most likely to succeed.”
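The method behind Alchemite™ is not described here, so the sketch below uses a different, generic technique purely to illustrate the idea of per-prediction uncertainty: a bootstrap ensemble, in which many simple models are fitted to resampled copies of the data and the spread of their predictions serves as the uncertainty estimate. It assumes NumPy, and the data are synthetic. The spread is small where training data exist and grows sharply when the model is asked to extrapolate.

```python
import numpy as np

def bootstrap_ensemble(x, y, degree=2, n_models=50, seed=0):
    # Fit one polynomial per bootstrap resample (rows drawn with replacement)
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), size=len(x))
        models.append(np.polyfit(x[idx], y[idx], degree))
    return models

def predict_with_uncertainty(models, x_new):
    # Ensemble mean is the prediction; ensemble standard deviation is the uncertainty
    preds = np.array([np.polyval(m, x_new) for m in models])
    return preds.mean(axis=0), preds.std(axis=0)

# Synthetic training data, sampled only for inputs between 0 and 1
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, 20)
y = 3.0 * x - 2.0 * x**2 + rng.normal(0.0, 0.1, 20)

models = bootstrap_ensemble(x, y)
mean, std = predict_with_uncertainty(models, np.array([0.5, 2.0]))
for xq, m, s in zip([0.5, 2.0], mean, std):
    print(f"x={xq}: prediction {m:.2f} +/- {s:.2f}")
```

A prediction at x = 2.0, well outside the sampled range, comes with a much larger spread than one at x = 0.5, which is the kind of signal that tells a scientist how far to trust a candidate.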

Machine Learning can also be used to enable adaptive design of experiments. Here, predictive power is focused not on proposing a new material, but on the continuous improvement of the Machine Learning model itself. The tool identifies what missing data would most effectively reduce the uncertainty in the model’s predictions, and can even factor in the cost of particular experiments to propose which tests should be done next in order to have the most positive impact on improving the model for the least cost.
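A cost-aware version of this idea can be sketched with the same kind of bootstrap ensemble. This is a simple, hypothetical heuristic, not the algorithm used by Intellegens: each candidate experiment is scored by the ensemble’s predictive spread divided by its assumed cost, and the highest-scoring candidate is proposed next. Candidates, costs and data are all invented for illustration, and NumPy is assumed.

```python
import numpy as np

def ensemble_std(x, y, x_cand, degree=2, n_models=50, seed=0):
    # Spread of predictions from polynomials fitted to bootstrap resamples of (x, y)
    rng = np.random.default_rng(seed)
    preds = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), size=len(x))
        preds.append(np.polyval(np.polyfit(x[idx], y[idx], degree), x_cand))
    return np.std(preds, axis=0)

def propose_next(x, y, x_cand, cost):
    # Heuristic score: uncertainty per unit cost, so a very uncertain but very expensive test can lose
    score = ensemble_std(x, y, x_cand) / np.asarray(cost, dtype=float)
    return int(np.argmax(score))

rng = np.random.default_rng(2)
x = rng.uniform(0.0, 0.5, 20)                       # existing tests only cover inputs 0 to 0.5
y = 3.0 * x - 2.0 * x**2 + rng.normal(0.0, 0.1, 20)
candidates = np.array([0.25, 0.75, 1.5])

choice_equal = propose_next(x, y, candidates, cost=[1, 1, 1])      # farthest extrapolation is most uncertain
choice_costly = propose_next(x, y, candidates, cost=[1, 1, 1000])  # unless it is prohibitively expensive
print(candidates[choice_equal], candidates[choice_costly])
```

Dividing by cost is the simplest way to trade information against expense; a fuller treatment would estimate how much each test would actually shrink the model’s uncertainty, rather than using the current spread as a proxy.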

Once these capabilities (designing new materials, virtual experimentation, and adaptive design of experiments) become feasible from a starting point of sparse, noisy experimental data, their combination can lead to dramatic results for materials development. The Rolls-Royce project, for example, identified new materials with a 90% reduction in the amount of experimentation required.

Project MEDAL – optimising process parameters

If Machine Learning has demonstrated its viability for improving the design of new AM materials, what about the next step in the AM process: optimising process parameters to enable consistent builds of parts from these new feedstock materials? Process parameter optimisation is the focus of Project MEDAL, a collaboration based at the University of Sheffield Advanced Manufacturing Research Centre (AMRC) North West, involving Boeing and supported by the UK’s National Aerospace Technology Exploitation Programme (NATEP).

Project MEDAL concentrates on Laser Beam Powder Bed Fusion (PBF-LB), the most widely used metal AM technology in industry. The project aims to dramatically reduce the amount of experimentation required to identify the right process parameters to manufacture high-density, high-strength PBF-LB parts from a new feedstock material. Ian Brooks, Technical Fellow and project lead at AMRC (Fig. 2), describes the traditional process development cycle as building and treating test samples, testing them, and then repeating the process with modifications until it converges on an acceptable solution, usually a balance between performance and cost. Just as we saw with materials design, the key challenge is to reduce the amount of experimentation required when a large number of variables interact in complex ways. The idiosyncrasies of individual AM machines add to the difficulty. Brooks illustrates how costly this can be: “A standard test methodology here would be taking a sample, mounting it, polishing it, etching it, and then analysing it and generating a response variable from a microscope or similar – so time-consuming and cost-intensive.”
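For PBF-LB, one widely used way to condense several process parameters into a single number is the volumetric energy density, E = P / (v · h · t), where P is laser power (W), v scan speed (mm/s), h hatch spacing (mm) and t layer thickness (mm), giving J/mm³. The sketch below uses it to prune a hypothetical candidate grid before any builds are made. The parameter levels and the screening window are invented for illustration and are not recommendations for any material. Because E collapses interacting parameters into one value, it is only a coarse screen, not a substitute for modelling those interactions.

```python
import itertools

def energy_density(power_w, speed_mm_s, hatch_mm, layer_mm):
    # Volumetric energy density E = P / (v * h * t), in J/mm^3
    return power_w / (speed_mm_s * hatch_mm * layer_mm)

# Hypothetical candidate levels for each parameter (layer thickness held fixed)
powers = [150, 200, 250, 300, 350]        # W
speeds = [600, 800, 1000, 1200, 1400]     # mm/s
hatches = [0.08, 0.10, 0.12]              # mm
layer = 0.03                              # mm

# Hypothetical screening window; a real window would come from prior data for the material
E_MIN, E_MAX = 50.0, 90.0

grid = list(itertools.product(powers, speeds, hatches))
shortlist = [
    (p, v, h) for p, v, h in grid
    if E_MIN <= energy_density(p, v, h, layer) <= E_MAX
]
print(f"{len(shortlist)} of {len(grid)} candidate parameter sets pass the screen")
```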

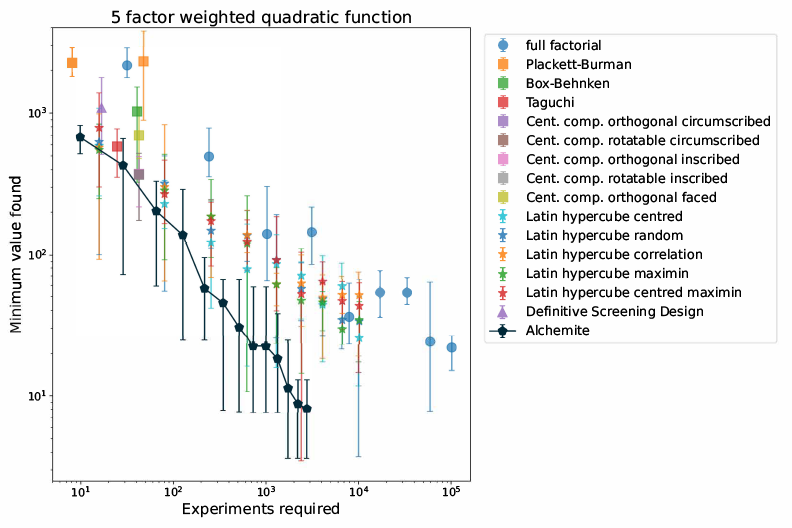

Presenting progress so far at a recent webinar, Ian Brooks showed a comparison between Machine Learning and a range of standard DOE methods for identifying the experiments needed to find the process parameters that would minimise a key process outcome. Machine Learning “was able to converge on the optimum solution with far fewer experiments.” Again, order-of-magnitude reductions were found for some of the response variables studied. Work to build on these findings is ongoing (Fig. 3).

“The opportunity for this project is to provide end-users with a validated, economically viable method of developing their own powder and parameter combinations,” Brooks explained. “[The findings] will have applications for other sectors including automotive, space, construction, oil and gas, offshore renewables, and agriculture.”

Lukas Jiranek, AM Engineer at Boeing Research & Technology, explained the company’s involvement in the project: “Across the company, Boeing employees are scaling up AM to produce metal and polymer components for a number of Boeing products and applications. There are currently over 70,000 AM components flying on various Boeing platforms. With these multiple efforts and the data-rich AM process chain, Machine Learning has the potential to be a key technology in accelerating the further development and adoption of AM. Project MEDAL is a valuable step forward in creating and proving-out standardised approaches to Machine Learning for data-driven AM process parameter development.”

Machine Learning in practice

This focus on practical implementation raises an important question. Can novel Machine Learning branch out beyond research projects and become a mainstream tool for AM project teams? “That’s already happening,” states Ben Pellegrini, CEO at Intellegens. “But it does require us to think hard not just about whether the technology works, but also about its deployability. We call this the ‘ML Ops’ challenge – Machine Learning hasn’t yet had the impact it could have because it doesn’t get operationalised; it’s just too hard to use. Of course, in many cases, that’s because people have not even been able to get past square one with sparse, noisy, experimental data. But even as we solve that problem, we need to be thinking about who will use the technology, and how.”

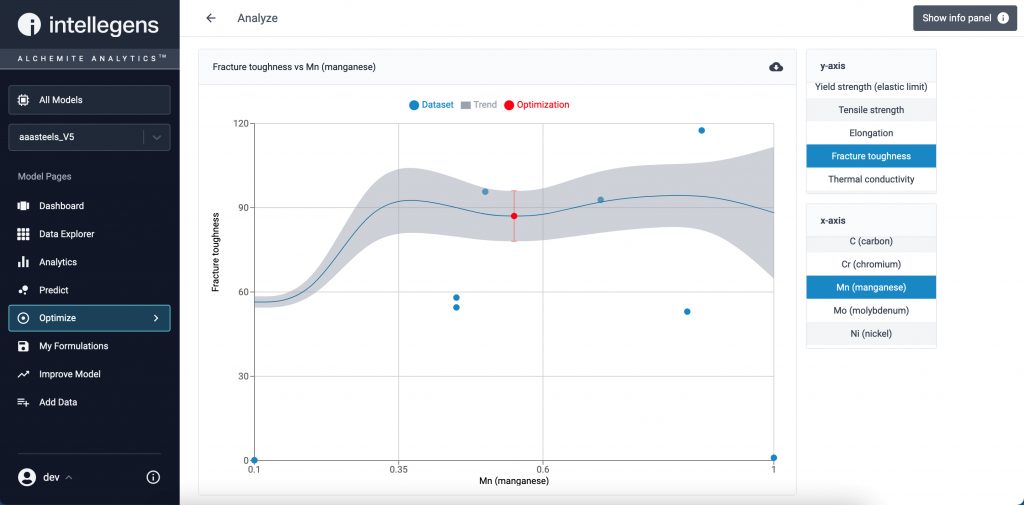

According to Pellegrini, that means thinking about two key classes of user. “The power users who will push the boundaries are data science teams – and they, quite rightly, will not be constrained to using one Machine Learning tool. They want methods that will easily plug-and-play with their own in-house tools and other systems, using open standards and scripting languages like Python.” But to really impact AM projects, validated models need to get into the hands of domain experts – scientists and engineers – so that they can combine new insights into their data with their expert intuition. This is a key message that Intellegens has heard from customers, said Pellegrini: “Alongside smart methods, they are guiding us to place an equal focus on delivery through easy-to-use desktop software that generates useful analytics without too much need to pre-process data or specify the detailed assumptions underlying the model” (Fig. 4).

Businesses also need to think about how Machine Learning can integrate with their data management infrastructure, both to push the right data into the Machine Learning models and to capture results and the models themselves so that they can be shared and reused. Engineering simulation software company Ansys, a leader in materials data management, is one company that has been thinking about this. “Most companies for whom materials and process data is a vital corporate asset now have systematic programmes in place to digitalise that asset, thus protecting valuable intellectual property, and making it much easier to exploit,” explained Sakthivel Arumugam, Senior Product Manager at Ansys. “We provide a solution to enable that specialist data management with Granta MI™, and we’ve recognised that our customers now want Machine Learning embedded into that infrastructure for their Additive Manufacturing material and process data.”

Conclusion

So, Machine Learning has demonstrated the potential to help AM project teams, both in designing new AM materials and in processing those materials to build AM parts. Practical implementations that deploy the technology for AM are gaining ground. We’ve seen a few specific examples, and there are sure to be many more.

But we’ve also seen some of the challenges that need to be overcome, and thus the criteria against which any solutions in this area need to be measured. Can they overcome the difficulties of training Machine Learning models with real-world, sparse, noisy, high-dimensional data? Do they provide the right combination of tools both to design new materials and processes and to support adaptive design of experiments? Do they provide an accurate understanding of the uncertainty in their predictions, enabling rational decision-making? Can they be deployed effectively both to data science teams, who want to combine and customise best-in-class Machine Learning, and to AM scientists and engineers, who just need a pragmatic tool to get useful insights from their data? Machine Learning solutions that tick these boxes can help to deliver more reliable, repeatable AM projects, sooner, and at lower cost – something real behind the hype for both AM and AI.

Author

Stephen Warde

Marketing Manager

Intellegens

Eagle Labs

28 Chesterton Road

Cambridge

CB4 3AZ

UK